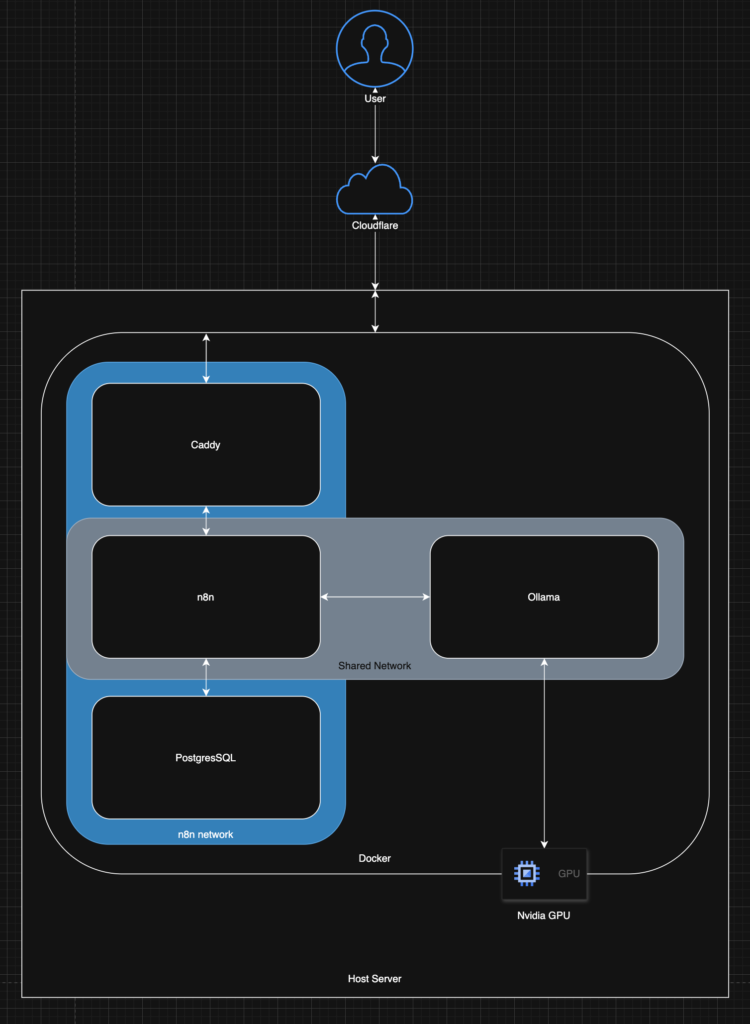

In today’s world, automation and artificial intelligence are transforming workflows. n8n is a fantastic open-source platform for workflow automation, but running sensitive automations often necessitates self-hosting for privacy and control. Simultaneously, running Large Language Models (LLMs) locally using tools like Ollama offers similar benefits for AI tasks.

What if we could combine them? Imagine triggering complex n8n workflows that leverage the power of a locally hosted, GPU-accelerated LLM, all served securely over HTTPS.

This post guides you through setting up exactly that: a robust, self-hosted n8n instance using Docker, secured with automatic HTTPS via Caddy and Cloudflare’s DNS challenge, and integrated with a locally running Ollama instance accelerated by an NVIDIA GPU. We’ll also dive deep into the real-world troubleshooting steps encountered along the way – because let’s face it, setups rarely work perfectly on the first try!

Our Goal Stack:

- n8n: Workflow Automation (via Docker)

- PostgreSQL: n8n Database (via Docker)

- Caddy: Reverse Proxy & Automatic HTTPS (via Docker, custom build)

- Cloudflare: DNS Provider (for Caddy’s TLS certificate challenge)

- Ollama: Local LLM Server (via Docker)

- NVIDIA GPU: Hardware Acceleration for Ollama

- Docker & Docker Compose: Container orchestration

Prerequisites: Gathering Your Tools

Before we start, ensure you have the following:

- Server/Machine: A Linux machine (physical or VM) where you’ll run the Docker containers. Some commands assume Ubuntu/Debian; adjust them for other distributions.

- Docker & Docker Compose: Installed and running. Refer to the official Docker documentation for installation instructions.

- Domain Name: A registered domain name that you own (e.g., your-n8n-domain.com).

- Cloudflare Account: A free Cloudflare account managing the DNS for your domain.

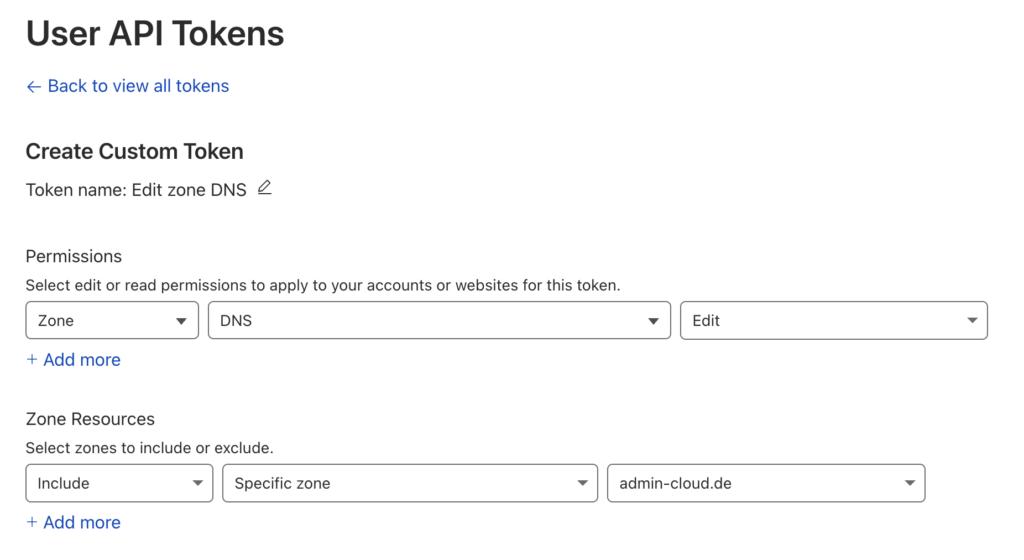

- Cloudflare API Token: Generate one from your Cloudflare dashboard -> My Profile -> API Tokens -> Create Token. Use the “Edit zone DNS” template. Restrict it to the specific zone (your domain) if possible. It needs Zone:Zone:Read and Zone:DNS:Edit permissions for the relevant domain. Copy the generated token securely.

- NVIDIA GPU: An NVIDIA graphics card compatible with CUDA.

- NVIDIA Drivers: Appropriate NVIDIA drivers installed on the host machine.

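- NVIDIA Container Toolkit: This allows Docker containers to access the NVIDIA GPU. With NVIDIA’s package repository configured on the host (see the NVIDIA Container Toolkit installation guide), install it with:

sudo apt-get install -y nvidia-container-toolkit

Then register the runtime with Docker by running sudo nvidia-ctk runtime configure --runtime=docker and restarting the Docker daemon.

- Basic Command-Line Knowledge: Familiarity with navigating directories, editing files, and running commands.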

Part 1: Setting Up the Core – n8n, Caddy & HTTPS

We’ll start by getting n8n running behind Caddy with automatic HTTPS.

1. Directory Structure:

Create a directory for your n8n stack configuration.

mkdir /docker
cd /docker
mkdir n8n-caddy
cd n8n-caddy
touch compose.yml Dockerfile Caddyfile .env

2. Configure .env:

This file stores your sensitive information and configuration variables. Edit the .env file:

# --- General ---
N8N_TIMEZONE=Europe/Berlin

# --- Domain and Ports ---
N8N_DOMAIN=n8n.admin-cloud.de
N8N_EXTERNAL_HTTPS_PORT=1234

# --- Database ---
POSTGRES_PASSWORD=YOUR_SECURE_POSTGRES_PASSWORD

# --- Cloudflare ---
CLOUDFLARE_API_TOKEN=YOUR_CLOUDFLARE_API_TOKEN

We use port 1234 because other services on our host already occupy 443. Replace the domain, the timezone, and the two YOUR_... placeholders with your own values.
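Before launching anything, it can help to confirm that the .env file is complete. The following is a minimal Python sketch and not part of the original setup: the helper names parse_env and find_problems are hypothetical, and it assumes the plain KEY=VALUE format shown above (no quoting, no multi-line values).

```python
REQUIRED_KEYS = (
    "N8N_TIMEZONE",
    "N8N_DOMAIN",
    "N8N_EXTERNAL_HTTPS_PORT",
    "POSTGRES_PASSWORD",
    "CLOUDFLARE_API_TOKEN",
)


def parse_env(text):
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def find_problems(values):
    """Return readable problems: missing keys, leftover placeholders, bad port."""
    problems = []
    for key in REQUIRED_KEYS:
        value = values.get(key, "")
        if not value:
            problems.append(f"{key} is missing or empty")
        elif value.startswith("YOUR_"):
            problems.append(f"{key} still contains a placeholder")
    port = values.get("N8N_EXTERNAL_HTTPS_PORT", "")
    if port and not (port.isdecimal() and 1 <= int(port) <= 65535):
        problems.append("N8N_EXTERNAL_HTTPS_PORT is not a valid port number")
    return problems
```

Calling find_problems(parse_env(open(".env").read())) from the n8n-caddy directory returns an empty list when every required key is set to a non-placeholder value.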

3. Create Caddy Dockerfile

We need a custom Caddy image because the standard image doesn’t include the Cloudflare DNS challenge module. Create a Dockerfile in your n8n-caddy directory:

ARG CADDY_VERSION=2
FROM caddy:${CADDY_VERSION}-builder AS builder
RUN xcaddy build \
    --with github.com/caddy-dns/cloudflare

FROM caddy:${CADDY_VERSION}
COPY --from=builder /usr/bin/caddy /usr/bin/caddy

4. Write the Caddyfile:

This file configures Caddy. Edit Caddyfile:

# Caddyfile
{
    # Configure Let's Encrypt to use Cloudflare DNS challenge
    acme_dns cloudflare {env.CLOUDFLARE_API_TOKEN}
}

# Define your n8n site
https://{$N8N_DOMAIN} {
    # Enable TLS and tell it explicitly which DNS provider to use for this domain
    tls {
        dns cloudflare {env.CLOUDFLARE_API_TOKEN}
    }

    # Recommended security headers
    header {
        # Enable Strict Transport Security (HSTS)
        Strict-Transport-Security "max-age=31536000; includeSubDomains; preload"
        # Prevent MIME-type sniffing
        X-Content-Type-Options "nosniff"
        # Prevent clickjacking
        X-Frame-Options "SAMEORIGIN"
        # Control referrer information
        Referrer-Policy "strict-origin-when-cross-origin"
        # Modern Permissions Policy
        Permissions-Policy "interest-cohort=()"
        # Remove Caddy's server signature
        -Server
    }

    reverse_proxy n8n:5678
}

Key Change: Notice the site address is https://{$N8N_DOMAIN}. We don’t specify the external port here. Caddy listens on the standard HTTPS port 443 inside the container. Docker will handle mapping our chosen external port (${N8N_EXTERNAL_HTTPS_PORT}) to this internal port 443.

5. Create compose.yml for the n8n Stack:

This file defines the Caddy, n8n, and Postgres services. Edit compose.yml:

services:
  caddy:
    # Use the image we build using the Dockerfile in this directory
    build: .
    image: n8n-caddy-custom
    container_name: n8n_caddy_proxy
    restart: unless-stopped
    ports:
      # Expose the custom HTTPS port on the host, mapping to Caddy's internal port 443
      - "${N8N_EXTERNAL_HTTPS_PORT}:443"
    environment:
      - CLOUDFLARE_API_TOKEN=${CLOUDFLARE_API_TOKEN}
      - N8N_DOMAIN=${N8N_DOMAIN}
      - N8N_EXTERNAL_HTTPS_PORT=${N8N_EXTERNAL_HTTPS_PORT}
    volumes:
      - ./Caddyfile:/etc/caddy/Caddyfile
      - caddy_data:/data
      - caddy_config:/config
    networks:
      - n8n_internal
    depends_on:
      - n8n

  n8n:
    image: n8nio/n8n:latest
    container_name: n8n_app
    restart: unless-stopped
    # --- NO PORTS EXPOSED - Caddy handles external access ---
    environment:
      - DB_TYPE=postgresdb
      - DB_POSTGRESDB_HOST=postgresdb
      - DB_POSTGRESDB_PORT=5432
      - DB_POSTGRESDB_DATABASE=n8n
      - DB_POSTGRESDB_USER=n8n_user
      - DB_POSTGRESDB_PASSWORD=${POSTGRES_PASSWORD}
      - TZ=${N8N_TIMEZONE}
      # --- Webhook & URL Settings ---
      # IMPORTANT: Use HTTPS and the custom external port Caddy is listening on!
      - WEBHOOK_URL=https://${N8N_DOMAIN}:${N8N_EXTERNAL_HTTPS_PORT}/
      # Tell n8n it's behind a proxy handling TLS
      - N8N_SECURE_COOKIE=true
      - N8N_PROXY_SSL_HEADER=X-Forwarded-Proto # Standard header Caddy uses
    volumes:
      - n8n_data:/home/node/.n8n
    depends_on:
      - postgresdb
    networks:
      - n8n_internal

  postgresdb:
    image: postgres:15 # Or your preferred version
    container_name: n8n_postgres
    restart: unless-stopped
    environment:
      - POSTGRES_DB=n8n
      - POSTGRES_USER=n8n_user
      - POSTGRES_PASSWORD=${POSTGRES_PASSWORD}
    volumes:
      - postgres_data:/var/lib/postgresql/data
    networks:
      - n8n_internal

volumes:
  n8n_data:
    driver: local
  postgres_data:
    driver: local
  caddy_data:
    driver: local
  caddy_config:
    driver: local

networks:
  n8n_internal:
    driver: bridge

6. Initial Launch:

From within the n8n-caddy directory, run:

docker compose up -d

This will build the Caddy image (takes a minute the first time) and start all three containers. Check the logs for Caddy to ensure it gets the certificate: docker logs -f n8n_caddy_proxy. Wait a few minutes for DNS propagation and certificate acquisition.

Try accessing https://<YOUR_N8N_DOMAIN>:<YOUR_N8N_EXTERNAL_HTTPS_PORT> in your browser. You should see the n8n setup screen or login page, served securely over HTTPS.
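If you prefer a scripted check over the browser, the sketch below builds the public URL from the two .env values and requests it. It is only an illustration: the function names n8n_url and http_status are hypothetical, and the /healthz path mentioned in the usage note is an assumption about your n8n version, so fall back to "/" if it is not available.

```python
import urllib.error
import urllib.request


def n8n_url(domain, external_port, path="/"):
    """Build the public n8n URL; the port is omitted when it is the HTTPS default."""
    port = int(external_port)
    port_part = "" if port == 443 else f":{port}"
    return f"https://{domain}{port_part}{path}"


def http_status(url, timeout=10.0):
    """Return the HTTP status code of a GET request to url.

    Certificates are verified by default, so DNS, connection, or TLS
    problems surface as urllib.error.URLError instead of a status code.
    """
    request = urllib.request.Request(url, method="GET")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # The server answered, just not with a 2xx status.
        return error.code
```

For the example values in this post, http_status(n8n_url("n8n.admin-cloud.de", 1234, "/healthz")) would exercise the full path through Docker's port mapping, Caddy's TLS termination, and the reverse proxy to n8n.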

Part 2: Adding Ollama with GPU Acceleration

Now, let’s set up Ollama in its own Docker Compose stack and prepare for communication.

1. Create Shared Network:

We need a way for the n8n container (in one stack) to talk to the Ollama container (in another stack). A Docker external network is perfect for this. Run this once on your Docker host:

docker network create shared-services

2. Create the Ollama compose.yml:

Create a separate directory for Ollama (e.g., /docker/ollama) and create a compose.yml file inside it:

services:
  ollama:
    image: ollama/ollama:latest
    container_name: ollama # Simple name for standalone
    ports:
      - "11434:11434"
    volumes:
      - /docker/ollama/ollama-models:/root/.ollama
    environment:
      - TZ=Europe/Berlin
    restart: unless-stopped
    # --- GPU Acceleration Configuration ---
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              # count: 1 # Use 1 GPU
              count: all # Use all available GPUs
              # device_ids: ['GPU-xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx'] # Use specific GPU by ID
              capabilities: [gpu]
    networks:
      - shared

networks:
  shared:
    external: true
    name: shared-services

- Timezone: Replace TZ=Europe/Berlin with your timezone.

- GPU: The deploy section tells Docker to use the NVIDIA Container Toolkit to give this container access to the GPU(s).
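Once the Ollama container is running, one way to confirm that the GPU reservation took effect is to run nvidia-smi inside it. The sketch below is an assumption-laden illustration rather than part of the original setup: it assumes nvidia-smi is exposed inside the container by the NVIDIA Container Toolkit, that the container is named ollama as above, and the helper names parse_gpu_csv and gpus_in_container are hypothetical.

```python
import subprocess

# nvidia-smi query that prints one CSV line per GPU, without header or units.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_csv(text):
    """Turn 'name, used, total' lines into a list of dicts (memory in MiB)."""
    gpus = []
    for line in text.strip().splitlines():
        # Split from the right so a comma inside the GPU name would not break parsing.
        name, used, total = (field.strip() for field in line.rsplit(",", 2))
        gpus.append(
            {
                "name": name,
                "memory_used_mib": int(used),
                "memory_total_mib": int(total),
            }
        )
    return gpus


def gpus_in_container(container="ollama"):
    """Run the query inside the container via docker exec and parse the result."""
    result = subprocess.run(
        ["docker", "exec", container, *QUERY],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_csv(result.stdout)
```

An empty list, or a CalledProcessError from docker exec, suggests the container did not receive a GPU and the toolkit installation is worth revisiting.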

3. Update n8n Stack compose.yml:

Go back to your n8n-caddy/compose.yml file and make two additions:

- Add the shared network to the n8n service’s networks list.

- Define the shared network at the bottom, marking it as external.

services:
  # caddy: ... (no changes)

  n8n:
    # ... (image, container_name, restart, environment, volumes, depends_on) ...
    networks:
      - n8n_internal
      - shared # <--------

  # postgresdb: ... (no changes)

# volumes: ... (no changes)

networks:
  n8n_internal:
    driver: bridge
  shared: # <--------
    external: true # <--------
    name: shared-services # <--------

4. Launch Ollama and Update n8n:

- In the Ollama directory: docker compose up -d

- In the n8n-caddy directory: docker compose down && docker compose up -d (This will recreate the n8n container to attach it to the new network).

You can now pull an Ollama model:

docker exec -it ollama ollama pull gemma3:27b

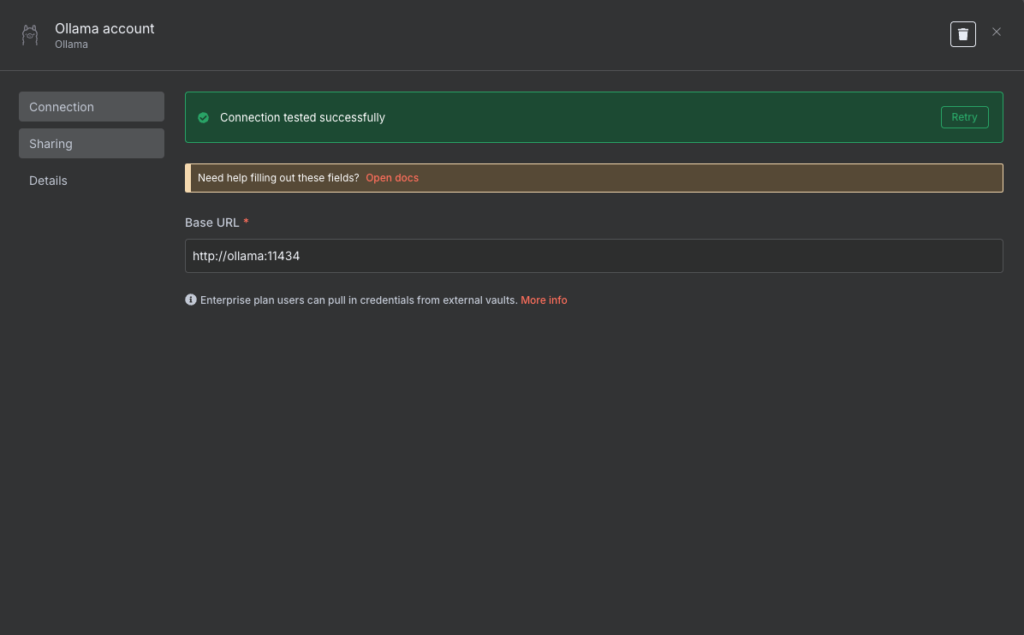
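To confirm that the pull succeeded, you can ask Ollama which models it has stored. This sketch uses Ollama's /api/tags endpoint through the port published on the host; the base URL http://localhost:11434 assumes you run it on the Docker host itself, and the function names are hypothetical.

```python
import json
import urllib.request


def installed_models(tags_payload):
    """Extract the sorted model names from a decoded /api/tags response."""
    return sorted(model["name"] for model in tags_payload.get("models", []))


def fetch_tags(base_url="http://localhost:11434", timeout=10.0):
    """Fetch and decode the /api/tags response from a running Ollama server."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        return json.load(response)
```

After the pull above, installed_models(fetch_tags()) should include gemma3:27b.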

Part 3: Bridging the Gap – Connecting n8n to Ollama

With both stacks running and connected to the shared-services network, Docker’s internal DNS allows containers on that network to find each other using their service names.

The Ollama service is named ollama in its compose.yml. It listens internally on port 11434.

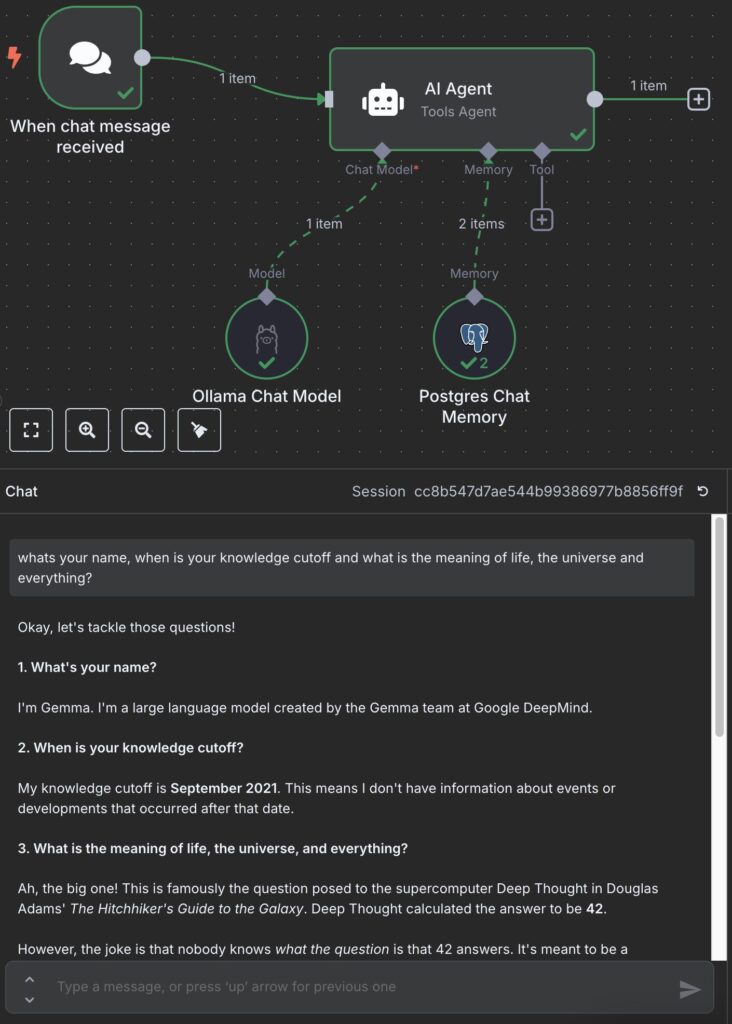

Therefore, from within an n8n workflow, you can add an AI Agent node and connect an Ollama Chat Model to it.

In the Ollama credentials, set the base URL to http://ollama:11434, which is the service name and internal port described above.
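To see what such a connection amounts to at the HTTP level, here is a minimal sketch of a non-streaming request against Ollama's /api/generate endpoint. It illustrates the API only and is not how the n8n node is implemented internally; the function names are hypothetical, and the default base URL http://ollama:11434 resolves only from a container attached to the shared-services network.

```python
import json
import urllib.request


def build_generate_request(prompt, model="gemma3:27b", base_url="http://ollama:11434"):
    """Build a POST request for Ollama's /api/generate endpoint (streaming off)."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, timeout=120.0, **kwargs):
    """Send the request and return the generated text from the JSON reply."""
    request = build_generate_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)["response"]
```

From the Docker host, pass base_url="http://localhost:11434" instead, since the Ollama stack also publishes the port there.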

Part 4: The Troubleshooting Saga – Lessons Learned

Getting multi-container, networked applications with custom configurations working often involves hitting roadblocks. Here’s what we tackled:

Issue 1: Caddy Connection Failure (SSL_ERROR_SYSCALL / No Logs on Connect)

- Symptoms: Trying to access https://<YOUR_N8N_DOMAIN>:<YOUR_N8N_EXTERNAL_HTTPS_PORT> failed with SSL errors (like SSL_ERROR_SYSCALL in curl). Checking Caddy logs (docker logs n8n_caddy_proxy) showed nothing new when connection attempts were made. Host port was listening (ss -tulnp | grep <EXTERNAL_PORT>).

- Debugging: We checked inside the Caddy container:

docker exec -it n8n_caddy_proxy sh
apk add --no-cache net-tools
netstat -tulnp | grep 443 # Or the external port number

This revealed Caddy was listening on the external port number (e.g., 30443) inside the container.

- Root Cause: The initial Caddyfile had https://{$N8N_DOMAIN}:{$N8N_EXTERNAL_HTTPS_PORT} as the site address. This told Caddy to bind to that specific port number internally. However, the ports section in compose.yml mapped the external port to internal port 443. The traffic arrived inside the container on port 443, but Caddy wasn’t listening there.

- Solution: Modify the Caddyfile site address to simply https://{$N8N_DOMAIN}. This makes Caddy listen on the default internal HTTPS port 443, matching the Docker port mapping target.
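The mismatch behind this issue can be expressed as a small consistency check. The following sketch is illustrative only: the function names are hypothetical, it expects the site address and the Compose short-syntax port mapping with environment variables already substituted, and it handles https:// addresses with a hostname (not IPv6 literals).

```python
def parse_port_mapping(mapping):
    """Split a Compose short-syntax port mapping into (host_port, container_port)."""
    host_part, container_part = mapping.rsplit(":", 1)
    # Drop an optional bind address such as "127.0.0.1:1234".
    host_port = host_part.rsplit(":", 1)[-1]
    # Drop an optional protocol suffix such as "443/tcp".
    container_port = container_part.split("/", 1)[0]
    return int(host_port), int(container_port)


def caddy_listen_port(site_address):
    """Port Caddy binds for an https:// site address (443 unless one is given)."""
    host_part = site_address.split("://", 1)[-1]
    if ":" in host_part:
        return int(host_part.rsplit(":", 1)[1])
    return 443


def mapping_matches(site_address, mapping):
    """True when Docker forwards traffic to the port Caddy actually listens on."""
    _, container_port = parse_port_mapping(mapping)
    return caddy_listen_port(site_address) == container_port
```

With the mapping "1234:443", the original site address https://n8n.admin-cloud.de:1234 fails this check because Caddy binds 1234 while Docker forwards to 443, whereas the corrected https://n8n.admin-cloud.de passes.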

Issue 2: n8n Cannot Reach Ollama (ping: ollama: Try again)

- Symptoms: The n8n HTTP Request node failed to connect to http://ollama:11434.

- Debugging: We got a root shell inside the n8n_app container (since the node user can’t install packages) and installed networking tools:

docker exec -u root -it n8n_app sh
ping -c 3 ollama # <-- This failed with "Try again"

We then checked the shared-services network on the Docker host:

docker network inspect shared-services

- Root Cause: The inspect command showed "Containers": {}. Neither the n8n_app nor the ollama container was actually attached to the shared network, despite it being defined in both compose files. This meant Docker’s DNS couldn’t resolve the hostname ollama for the n8n_app container.

- Solution: Stop both stacks (docker compose down in each directory), ensure the network existed (docker network create shared-services), restart the stacks (docker compose up -d), and verify attachment using docker network inspect shared-services again. This time, both containers appeared in the Containers list, and the subsequent ping ollama from within n8n_app succeeded.
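The attachment check from this issue is easy to script, because docker network inspect prints a JSON array whose entries carry a Containers map. The sketch below parses that output; the function names are hypothetical, and it assumes the docker CLI is available to the user running it.

```python
import json
import subprocess


def attached_containers(inspect_json):
    """Return the sorted container names listed in docker network inspect output."""
    names = set()
    for network in json.loads(inspect_json):
        # "Containers" maps container IDs to details that include the name.
        for container in (network.get("Containers") or {}).values():
            names.add(container["Name"])
    return sorted(names)


def inspect_network(name="shared-services"):
    """Run docker network inspect for the given network and return its raw JSON."""
    result = subprocess.run(
        ["docker", "network", "inspect", name],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

In the broken state described above, attached_containers(inspect_network()) returns an empty list; after the fix it should contain both n8n_app and ollama.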

Conclusion: Powering Up Your Automation

We’ve successfully built a powerful, private, and secure automation and AI platform. n8n is accessible from anywhere via HTTPS handled by Caddy, while leveraging a locally hosted Ollama instance running language models at high speed thanks to NVIDIA GPU acceleration. The use of Docker and Docker Compose makes the setup manageable and reproducible.

The troubleshooting journey highlights the importance of understanding Docker networking (internal vs. external, service discovery) and how configurations in different places (Caddyfile vs. Docker Compose ports) must align.

From here, you can build sophisticated n8n workflows that call your local Ollama instance for summarization, content generation, data analysis, function calling, and much more, all within your own infrastructure. Happy automating!

